EDIT: ⚠️⚠️⚠️ This article describes a test; please visit the official repository: SimpleWebXR for Unity ⚠️⚠️⚠️

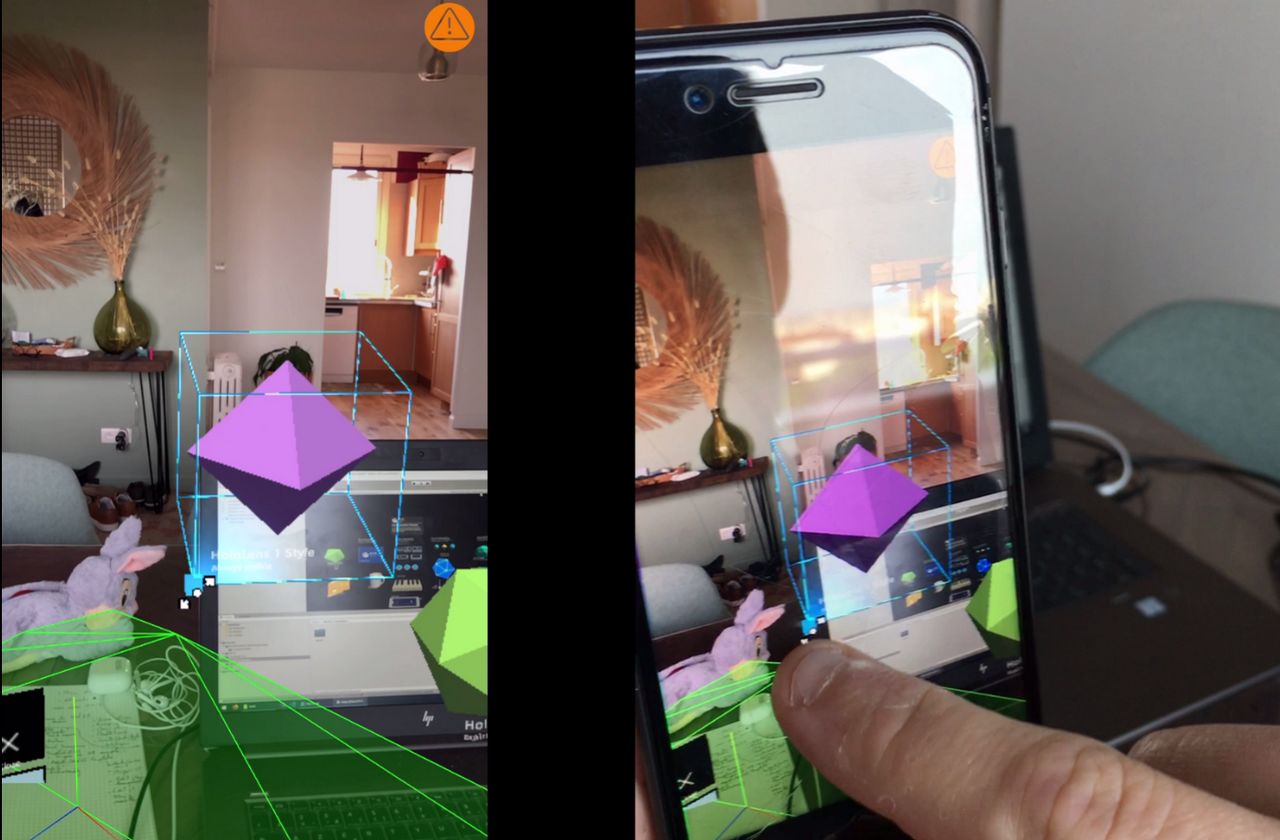

This article describes how I added a WebXR target to the MRTK, making augmented reality possible in the web browser. This project is a proof of concept, not a production-grade implementation! I developed this demonstration over a long weekend during lockdown.

Associated GitHub repository: https://github.com/Rufus31415/MixedRealityToolkit-Unity-WebXR

Live demo (please use the WebXR Viewer browser app on iOS): https://rufus31415.github.io/sandbox/webxr-hand-interaction/

What is the Mixed Reality Toolkit?

MRTK-Unity is a Microsoft-driven project that provides a set of components and features, used to accelerate cross-platform MR app development in Unity. Here are some of its functions:

- Provides the basic building blocks for Unity development on HoloLens, Windows Mixed Reality, and OpenVR.

- Enables rapid prototyping via in-editor simulation that allows you to see changes immediately.

- Operates as an extensible framework that provides developers the ability to swap out core components.

- Supports a wide range of platforms, including:

  - Microsoft HoloLens

  - Microsoft HoloLens 2

  - Windows Mixed Reality headsets

  - OpenVR headsets (HTC Vive / Oculus Rift)

read more…

What is WebXR?

The WebXR Device API provides access to input and output capabilities commonly associated with Virtual Reality (VR) and Augmented Reality (AR) devices. It lets you develop and host VR and AR experiences on the web. As of May 2020, few browsers are WebXR compatible. read more…

What is Unity?

Unity is a 3D rendering engine and development environment for creating video games as well as 3D augmented or virtual reality experiences. It can build applications for Windows, macOS, Linux, PlayStation, Xbox, HoloLens, WebGL…

WebGL is the export format used here.

What is WebGL?

WebGL makes it possible to dynamically display, create, and manage complex 3D graphical elements in a client’s web browser. Most desktop and mobile browsers are now compatible with WebGL.

How do you mix all these technologies?

MRTK is developed in Unity. I used its Hand Interaction example scene and built it for WebGL with Unity, which produces JavaScript and WebAssembly. Since Safari on iOS is not yet compatible with WebXR (and I don’t have an Android phone), I had to use the XRViewer browser developed by Mozilla. However, its implementation of WebXR is not standard because it was a test application (read more about XRViewer app…). I was inspired by the examples provided by Mozilla and added the generated WebGL component on top of them. I use the WebXR JavaScript API to transmit the position of the smartphone to the Unity Main Camera.

Following the Mozilla examples, I also use Three.js to display the world mapping mesh (in green).

Touch screen

A new Input System Profile has been created to support multitouch as well as the mouse pointer. See MyMixedRealityInputSystemProfile.asset and MyMixedRealityHandTrackingProfile.asset.

WebGL canvas transparent background

The WebGL canvas is placed on top of the video canvas. The black background normally generated by Unity must be made transparent in order to view the video behind the holograms.

To achieve this, a new Camera Profile has been created with a solid color (black background) and 100% transparency.

In addition, a jslib plugin has been written to apply this transparency, which WebGL does not support by default.

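```javascript
// jslib plugin: overrides glClear in the generated WebGL build
var LibraryGLClear = {
  glClear: function (mask) {
    // 0x00004000 is GL_COLOR_BUFFER_BIT
    if (mask == 0x00004000) {
      var v = GLctx.getParameter(GLctx.COLOR_WRITEMASK);
      // Skip clears that would write only the alpha channel,
      // so the canvas keeps its transparency
      if (!v[0] && !v[1] && !v[2] && v[3]) return;
    }
    GLctx.clear(mask);
  }
};

mergeInto(LibraryManager.library, LibraryGLClear);
```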

Finally, after the build is generated, the Build/build.json file must be modified to replace "backgroundColor": "#000000" with "backgroundColor": "transparent". This step could be automated as a post-build operation.
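
The build.json change described here could be scripted, for example with a small Node.js helper run after each build. The sketch below is only an illustration: the function name `makeBackgroundTransparent` is hypothetical, and it assumes build.json is plain JSON with a top-level `backgroundColor` key, as the replacement described in this section suggests.

```javascript
// Hypothetical post-build helper (not part of the original project).
// Takes the text of Build/build.json and returns it with a transparent background.
function makeBackgroundTransparent(jsonText) {
  var config = JSON.parse(jsonText);
  config.backgroundColor = "transparent";
  return JSON.stringify(config, null, 2);
}

// Example with an in-memory string. A real script would read and write
// Build/build.json with fs.readFileSync and fs.writeFileSync.
var patchedConfig = makeBackgroundTransparent('{"backgroundColor": "#000000"}');
console.log(patchedConfig);
```

Parsing the file rather than doing a text search keeps the patch independent of whitespace and key order in the generated file.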

HTML

A WebGL export template is used to generate the web page of the final application. This template was inspired by the World Sensing example from Mozilla. It manages the display of the video, the start of the WebXR session, and the display in green of the mesh from the world scanning. I overlaid the WebGL canvas generated by Unity on the video.

Communication between WebXR and Unity

The position of the smartphone is held in a Three.js Camera object. It is read in a loop and transmitted to the Unity camera with the following code:

```javascript
var pose = new THREE.Vector3();
var quaternion = new THREE.Quaternion();
var scale = new THREE.Vector3();
engine.camera.matrix.decompose(pose, quaternion, scale);

var coef = 1/0.4; // Three.js to Unity length scale

// Z is negated; crx/cry/crz are sign factors applied to the Euler angles on the Unity side
var msg = {
  x: pose.x * coef, y: pose.y * coef, z: -pose.z * coef,
  _x: quaternion._x, _y: quaternion._y, _z: quaternion._z, _w: quaternion._w,
  crx: -1, cry: -1, crz: 1,
  fov: 40
};
var msgStr = JSON.stringify(msg);

unityInstance.SendMessage("JSConnector", "setCameraTransform", msgStr); // send message to Unity (only 1 argument possible)
```

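On the Unity side, the setCameraTransform method of the JSConnector object decodes the message and applies it to the main camera:

```csharp
// Called from JavaScript through unityInstance.SendMessage
public void setCameraTransform(string msgStr) {
    var msg = JsonUtility.FromJson<JSCameraTransformMessage>(msgStr);
    Camera.main.transform.position = new Vector3(msg.x, msg.y, msg.z);

    // Rebuild the rotation, scaling each Euler angle by its sign factor (crx, cry, crz)
    var quat = new Quaternion(msg._x, msg._y, msg._z, msg._w);
    var euler = quat.eulerAngles;
    Camera.main.transform.rotation = Quaternion.Euler(msg.crx * euler.x, msg.cry * euler.y, msg.crz * euler.z);

    Camera.main.fieldOfView = msg.fov;
}
```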

Some settings, such as the scale change and the FOV, are hard-coded and tuned to my iPhone 7 for this POC.
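
Rather than hard-coding the FOV, one option would be to derive it from the projection matrix that WebXR exposes for each view (`XRView.projectionMatrix`, a 16-element column-major array). This is a minimal sketch, assuming a standard symmetric perspective projection; the function name `verticalFovDegrees` is hypothetical and this is not what the POC does.

```javascript
// Hypothetical helper: recovers the vertical field of view, in degrees,
// from a column-major perspective projection matrix.
function verticalFovDegrees(projectionMatrix) {
  // For a symmetric perspective projection, element 5 equals 1 / tan(fovY / 2).
  var yScale = projectionMatrix[5];
  return (2 * Math.atan(1 / yScale) * 180) / Math.PI;
}

// Example: build a matrix whose vertical FOV is 40 degrees, then read it back.
var exampleMatrix = new Float32Array(16);
exampleMatrix[5] = 1 / Math.tan((20 * Math.PI) / 180);
var fov = verticalFovDegrees(exampleMatrix);
console.log(fov.toFixed(2));
```

Unity's Camera.fieldOfView is also a vertical angle in degrees, so a value computed this way could be sent in place of the fixed `fov: 40`.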

To be more efficient, the WebXR API should be accessed from a jslib plugin so that the code is integrated into the WebGL build. This would avoid the weight of Three.js, the latency of the SendMessage function, and the encoding and decoding of JSON strings. It would also be necessary to work out how to pass the correct FOV and scale parameters to Unity. The current code is clearly inefficient, but it is a quick, working proof of concept.
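
As a starting point for such a refactoring, the conversion from a WebXR pose to Unity coordinates can be written without Three.js. The sketch below assumes a standard WebXR viewer pose (an `XRRigidTransform` with `position` and `orientation`), which XRViewer's draft implementation may not provide in this form; `webxrPoseToUnity` and `lengthScale` are hypothetical names. It mirrors the Z axis to go from WebXR's right-handed frame to Unity's left-handed one, which is a different technique from the Euler-angle sign factors used in the code shown earlier.

```javascript
// Hypothetical helper: converts a WebXR-style rigid transform to Unity conventions.
function webxrPoseToUnity(transform, lengthScale) {
  var p = transform.position;
  var q = transform.orientation;
  return {
    // Mirror the Z axis and apply the length scale.
    position: { x: p.x * lengthScale, y: p.y * lengthScale, z: -p.z * lengthScale },
    // Mirroring Z negates the x and y components of the quaternion.
    rotation: { x: -q.x, y: -q.y, z: q.z, w: q.w }
  };
}

// Example with a plain object shaped like an XRRigidTransform.
var samplePose = webxrPoseToUnity(
  { position: { x: 1, y: 2, z: 3 }, orientation: { x: 0, y: 0, z: 0, w: 1 } },
  1 / 0.4 // length scale used in this POC
);
console.log(JSON.stringify(samplePose));
```

In a render loop, the input would typically come from `frame.getViewerPose(referenceSpace).transform`, and the resulting quaternion could be assigned directly to the Unity camera rotation without going through Euler angles.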

Compilation

WebGL compilation is performed by Unity using the IL2CPP scripting backend, then the Emscripten WebAssembly compiler. Note that the ARSessionOrigin.cs file in the AR Foundation package was deliberately committed because it includes modifications that make it compile for WebGL.

As mentioned above, after compilation, the Build/build.json file must be modified to support transparency.

Finally, to avoid warnings at runtime about browser incompatibility, the code generated in UnityLoader.js should be modified by replacing UnityLoader.SystemInfo.mobile with false and ["Edge", "Firefox", "Chrome", "Safari"].indexOf(UnityLoader.SystemInfo.browser)==-1 with false.
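
These two replacements could also be scripted. The following is a rough sketch with hypothetical helper names (`replaceAllLiteral`, `disableCompatibilityWarnings`); it assumes the expressions appear in UnityLoader.js exactly as written in this article, which may not hold if the generated file uses different spacing or quoting.

```javascript
// Hypothetical helper: replaces every literal occurrence of `search` in `text`.
function replaceAllLiteral(text, search, replacement) {
  return text.split(search).join(replacement);
}

// Hypothetical helper: neutralises the two compatibility checks in the loader source.
function disableCompatibilityWarnings(loaderSource) {
  var browserCheck =
    '["Edge", "Firefox", "Chrome", "Safari"].indexOf(UnityLoader.SystemInfo.browser)==-1';
  var patched = replaceAllLiteral(loaderSource, browserCheck, "false");
  return replaceAllLiteral(patched, "UnityLoader.SystemInfo.mobile", "false");
}

// Example on a made-up fragment (not the real UnityLoader.js content).
var sampleLoader = "if (UnityLoader.SystemInfo.mobile) { warn(); }";
var patchedLoader = disableCompatibilityWarnings(sampleLoader);
console.log(patchedLoader); // prints "if (false) { warn(); }"
```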

Browser compatibility

To date (May 2020), the XRViewer application is the only browser that offers a WebXR implementation on iOS (Safari is not yet compatible). But this implementation is a draft, as explained here. It would not be complicated to make this example compatible with Chrome for Android, or with Chrome or Firefox on desktop. This would make it possible to generate Unity scenes compatible with Android and with virtual reality devices like WMR, Oculus, OpenVR, or Vive. Maybe I’ll work on it if needed 😀.

If you are using a browser other than XRViewer, you will get an error popup indicating that WebXR is not supported. You can still enjoy the WebGL scene with the mouse.
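
This fallback could be decided up front with a simple feature check. The sketch below assumes the standard `navigator.xr` entry point and is not necessarily how the POC detects support; `hasWebXR` and `chooseInputMode` are hypothetical names.

```javascript
// Hypothetical helper: true when the navigator-like object exposes a WebXR entry point.
function hasWebXR(nav) {
  return Boolean(nav && nav.xr && typeof nav.xr.requestSession === "function");
}

// Hypothetical helper: picks WebXR when available, otherwise plain mouse input.
function chooseInputMode(nav) {
  return hasWebXR(nav) ? "webxr" : "mouse";
}

// Examples with stand-in objects. In a page this would be chooseInputMode(navigator).
var modeWithoutXR = chooseInputMode({});
var modeWithXR = chooseInputMode({ xr: { requestSession: function () {} } });
console.log(modeWithoutXR, modeWithXR);
```

A stricter check could additionally call `navigator.xr.isSessionSupported("immersive-ar")`, which is asynchronous and returns a promise.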

With another browser :

With XRViewer :